Climate-cooling aerosols can form from tree vapors

The cooling effect of pollution may have been exaggerated.

Fossil fuel burning spews sulfuric acid into the air, where it can form airborne particles that seed clouds and cool Earth’s climate. But that’s not the only way these airborne particles can form, three new studies suggest. Tree vapors can turn into cooling airborne particles, too.

The discovery means these particles were more abundant before the Industrial Revolution than previously thought. If the preindustrial air already held more of them, pollution has added relatively less to the total, so climate scientists have overestimated the cooling caused by air pollution, says atmospheric chemist Urs Baltensperger, who coauthored the three studies.

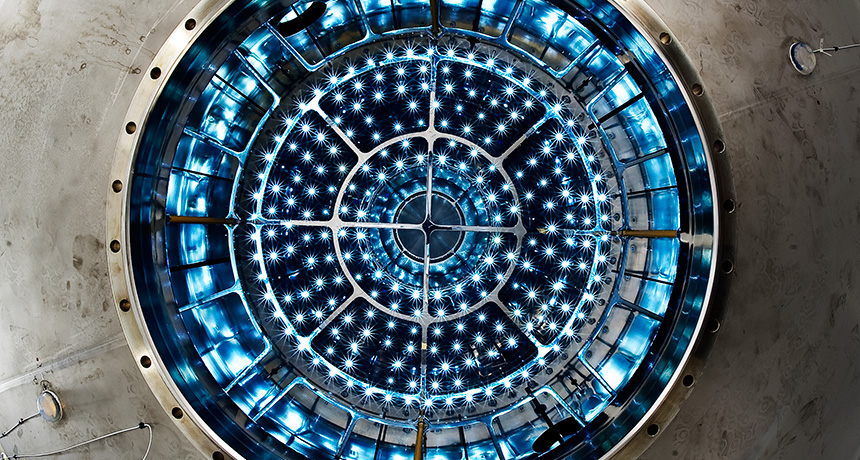

Simulating unpolluted air in a cloud chamber, Baltensperger and colleagues created microscopic particles from vapors released by trees. In the real world, cosmic rays whizzing into the atmosphere foster the development of these particles, the researchers propose in the May 26 Nature. Once formed, the particles can grow large enough to form the heart of cloud droplets, the researchers show in a second paper in Nature. And after sniffing the air over the Swiss Alps, some of the same researchers report in the May 27 Science that they found the particles in the wild.

“These particles don’t just form in the laboratory, but also by Mother Nature,” says Baltensperger, of the Paul Scherrer Institute in Villigen, Switzerland.

Airborne particles, called aerosols, are microscopic bundles of molecules. Some aerosols start fully formed, such as dust and salts from sea spray, while others assemble from molecules in the atmosphere.

Since the 1970s, scientists have suspected that sulfuric acid is a mandatory ingredient for aerosols assembled in the air. Sulfuric acid molecules react with other molecules to form clusters that, if they grow large enough, can become stable. Human activities such as coal burning have boosted sulfuric acid concentrations in the atmosphere, in turn boosting the abundance of aerosols that seed clouds and reflect sunlight like miniature disco balls. That aerosol boost partially offsets warming from greenhouse gases.

A cloud chamber at the CERN laboratory near Geneva allowed Baltensperger and his collaborators to simulate the atmosphere when sulfuric acid was scarce. The researchers added alpha-pinene, the organic vapor that gives pine trees their characteristic smell, to pristine air and watched for growing aerosols. Earlier work, though inconclusive, had suggested that the pine vapors might form aerosols.

Alpha-pinene molecules reacted with ozone in the air, yielding molecules that in turn reacted and bundled together into aerosols, the researchers observed. The researchers added an extra layer of realism by using one of CERN’s particle beams to mimic ions from the cosmic rays bombarding Earth’s atmosphere. With the simulated “rays” switched on, up to 100 times as many aerosols formed as without them. The added ions help stabilize the growing aerosols, the researchers propose.

Further testing showed that the newborn aerosols can rapidly grow from around 2 nanometers wide — roughly the diameter of a DNA helix — to 80 nanometers across, large enough to seed cloud droplets.

At a research station high in the Swiss Alps, researchers observed aerosols forming when sulfuric acid concentrations were low and molecules akin to alpha-pinene were abundant. The researchers couldn’t confirm the rapid growth seen in the lab, though.

Quantifying the overall climate influence of fossil fuel burning in light of the new discovery will be tricky, says Renyi Zhang, an atmospheric chemist at Texas A&M University in College Station. “Atmospheric processes are complex,” he says. “They had a pure setup, but in reality the atmosphere is loaded with chemicals. It’s hard to draw direct conclusions at this point.”