Protein mobs kill cells that most need those proteins to survive

Joining a gang doesn’t necessarily make a protein a killer, a new study suggests. Protein clumping gets dangerous only under certain circumstances.

A normally innocuous protein can be engineered to clump into fibers similar to those formed by proteins involved in Alzheimer’s, Parkinson’s and brain-wasting prion diseases such as Creutzfeldt-Jakob disease, researchers report in the Nov. 11 Science. Cells that rely on the protein’s normal function for survival die when the proteins glom together. But cells that don’t need the protein are unharmed by the gang activity, the researchers discovered. The finding may shed light on why clumping proteins that lead to degenerative brain diseases kill some cells, but leave others untouched.

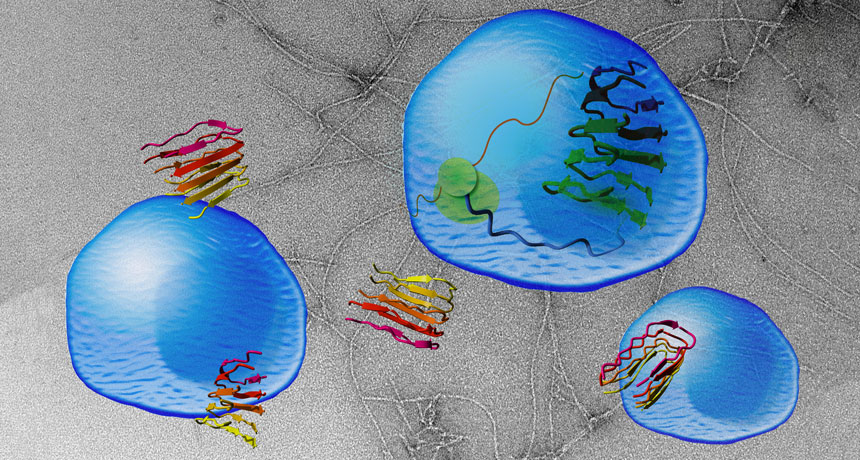

Clumpy proteins known as prions or amyloids have been implicated in many nerve-cell-killing diseases (SN: 8/16/08, p. 20). Such proteins are twisted forms of normal proteins that can make other normal copies of the protein go rogue, too. The contorted proteins band together, killing brain cells and forming large clusters or plaques.

Scientists don’t fully understand why these mobs resort to violence or how they kill cells. Part of the difficulty in reconstructing the cells’ murder is that researchers aren’t sure what jobs, if any, many of the proteins normally perform (SN: 2/13/10, p. 17).

A team led by biophysicists Frederic Rousseau and Joost Schymkowitz of the Catholic University of Leuven in Belgium came up with a new way to dissect the problem. They started with a protein whose function they already knew and engineered it to clump. That protein, vascular endothelial growth factor receptor 2, or VEGFR2, is involved in blood vessel growth. Rousseau and colleagues clipped off the portion of the protein that causes it to cluster with other proteins, creating an artificial amyloid.

Masses of the protein fragment, nicknamed vascin, could aggregate with and block the normal activity of VEGFR2, the researchers found. When the researchers added vascin to human umbilical vein cells grown in a lab dish, the cells died because VEGFR2 could no longer transmit hormone signals the cells need to survive. But human embryonic kidney cells and human bone cancer cells remained healthy. Those results suggest that some forms of clumpy proteins may not be generically toxic to cells, says biophysicist Priyanka Narayan of the Whitehead Institute for Biomedical Research in Cambridge, Mass. Instead, rogue clumpy proteins may target specific proteins and kill only cells that rely on those proteins for survival.

Those findings may also indicate that prion and amyloid proteins, such as Alzheimer’s nerve-killing amyloid-beta, normally play important roles in some brain cells. Those cells would be the ones vulnerable to attack from the clumpy proteins.

The newly engineered ready-to-rumble protein may open new ways to inactivate specific proteins in order to fight cancer and other diseases, says Salvador Ventura, a biophysicist at the Autonomous University of Barcelona. For instance, synthetic amyloids of overactive cancer proteins could gang up and shut down the problem protein, killing the tumor.

Artificial amyloids might also be used to screen potential drugs for anticlumping activity that could be used to combat brain-degenerating diseases, Rousseau suggests.